Building a server-side application to manage the secure generation, storage and retrieval of access tokens, with Linx (a low-code platform)

Background

When building integrations with the big 3rd-party service providers such as Google, GitHub or Microsoft, an application requires the user to grant it permission to act on the user’s behalf. This involves the OAuth 2.0 Authorization Code Grant flow between the user, the server-side app and the service provider’s authorisation server.

This authorisation “dance” can be difficult to achieve initially. It can become frustrating and hinder your progress with integrations, especially when you want to build out your concepts. Furthermore, when developing integrations in teams, you need to share common access to many resources, which can become difficult with manual user authorisation of applications and the sharing of access.

Due to the above reasons, I was motivated to create a standard service that would handle the generation of these access tokens across the multiple 3rd-party systems that I integrate daily in my work with Linx. The goal was to create a centralised Linx “access token management service” that I could use across all my integrations.

This idea then morphed into creating a publicly available service that anyone can use to generate, store, and retrieve access tokens for use in development (and even production).

Application design and security

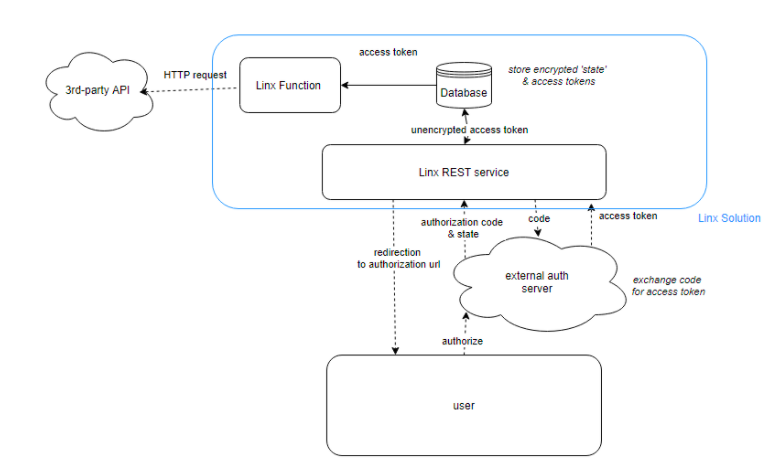

Before the final idea came to mind, I was implementing the following design to generate the access tokens:

My original design involved a REST service that would receive the callback request and exchange the code for a token. This token would then be written to a database unencrypted. The token would then be retrieved internally within the solution from the database and used in an HTTP request.

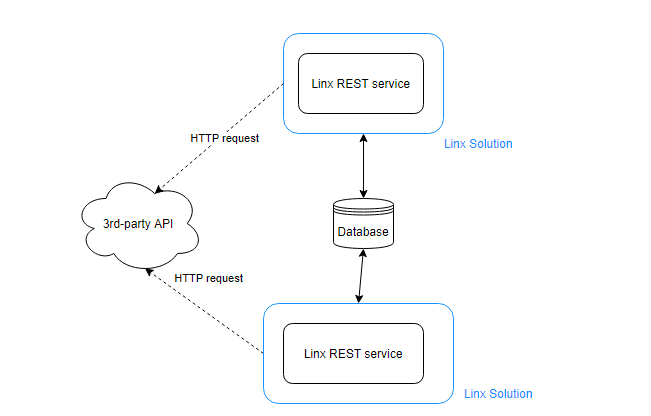

This design was implemented on a ‘per Solution’ basis, meaning that I implemented a separate REST service per integration; this isn’t too difficult to set up as you can copy and paste entire pieces of functionality with Linx. However, as the number of integrations increased, I needed a better way of using access tokens without re-authorizing a new app for each integration, so I decided to write the token to a common database. I could then just have a generic process that retrieves the token from the database.

With time, I realised that this wasn’t the best design in terms of security, scaling, or the effort of re-implementing a REST service per integration.

Implementing the services across the different Linx Solutions wasn’t difficult at all, as they all followed the same OAuth 2.0 framework with only minor differences. I just copied and pasted entire authentication modules into each solution and tweaked them to the specific service provider’s needs. As easy as this was, it wasn’t best practice in terms of maintenance and usability.

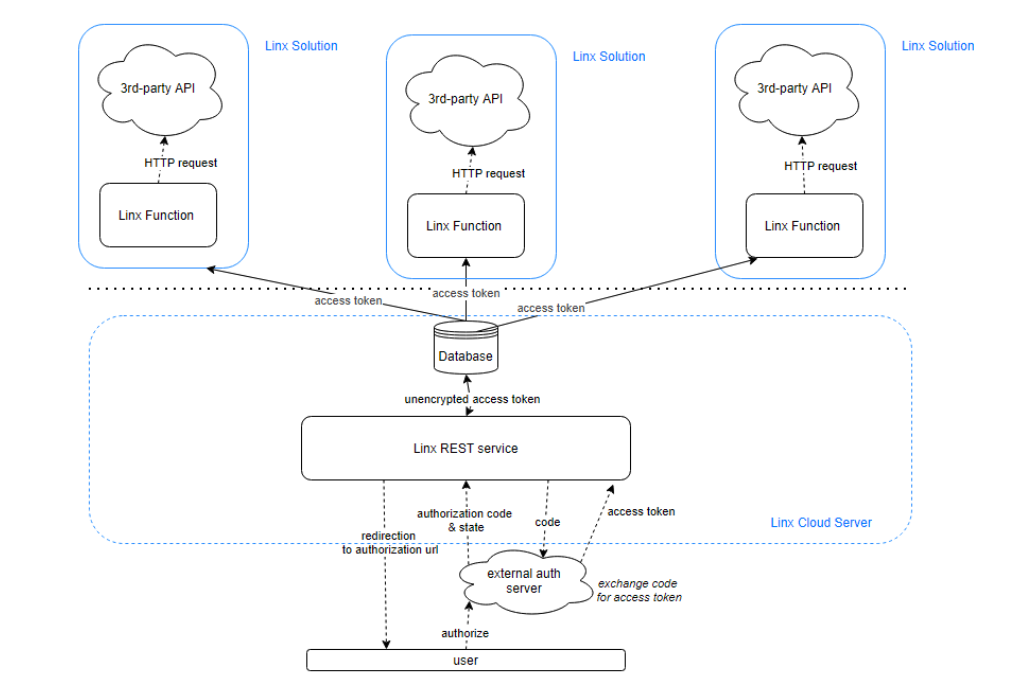

This led me to extend the idea of a central database to have a single generic web service that I could use to generate and manage the OAuth 2.0 process and the storage of tokens.

Customisations per service provider would still have to be completed, but this time only in a single place. I could then concentrate on streamlining the best design for all of the service providers’ nuances.

So what I came up with was exactly that, a single web service that:

– Generated a redirection URL and redirected the user-agent with the response

– Received the callback request after authorisation and validated the state

– Exchanged the authorisation code for an access token

– Stored the token in a database

This served me pretty well for my personal development projects. However, it became apparent that managing the different pieces of functionality would become an issue in terms of unit testing, running servers and database connections, and using multiple user accounts. In addition, all the services and functionality were contained in a single place, which made the solution and its various pieces of functionality difficult to maintain.

Furthermore, while recently working on other projects as a team, we encountered issues with storing the tokens and giving people access to already authorised applications. 2FA is required to authenticate in many situations, and scenarios may arise where this is not feasible. In some cases, tokens would be overwritten without members’ knowledge or generated on separate systems, delaying development.

So from a usage and security point of view, this still didn’t cut it.

I then decided that we needed some form of key security applied to the service so that you were able to pass on and revoke access to specific access tokens. For example, this could mean that if an admin generates an API key, this API key can be distributed amongst the team members. Then, when re-authorization is required, the user can update the access token without re-distributing. Additionally, users can use keys to differentiate multiple service providers and restrict access without necessarily interacting with the service provider’s authorisation server.

The simplified version of this authentication service would be publicly accessible for external users to:

– Create API Keys which are then used for linking multiple access tokens.

– Use the API Key to retrieve the required access token and use it in an external application to authenticate requests made to the specific service provider.

Privacy and security approach

Now, this led me on to my next concern: “How do I store confidential data in a way that even the admin of the system cannot use the tokens to gain access to protected resources?”

With this question and the Linx architecture in mind, I followed the below security design principles:

– Small services that achieve small tasks

– Isolate separate services

– Principle of least privilege

– Minimize the attack surface

– Layered approach

Service architecture

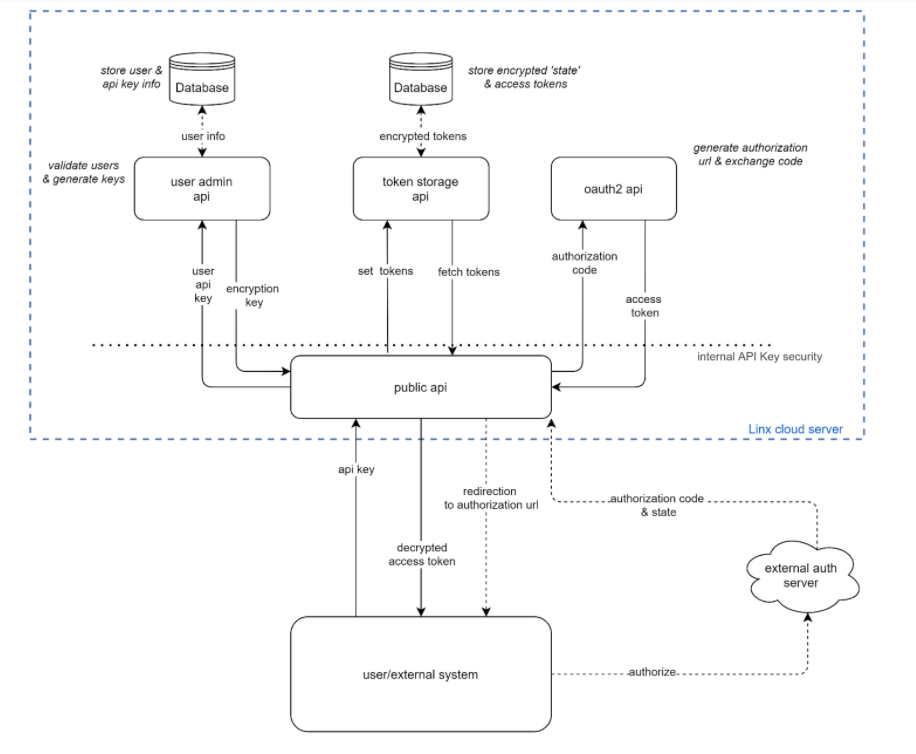

After iterating through several design choices, which I could quickly try out in Linx to prove my concept, I ended up with this (all built using Linx):

The system design consists of 4 separate REST web services hosted on a single Linx cloud server. In addition, there is a single public-facing API that acts as the intermediary between a user and the separate sub-services.

Each sub-service is also a REST API and has been designed to handle a separate group of related functionality. This was done with maintenance and security in mind.

The idea here is that these sub-services can be further isolated and deployed to different servers, limiting the risk of a complete breach. In addition, separating the encryption keys and API keys adds a further layer of security, even though any encryption and decryption functions only use values that are available at runtime and that only the user knows.

The ‘Public API’ is responsible for retrieving the different credentials from the separate data persistence layers and decrypting the relevant tokens.

By isolating the services into functional groups and separating the data persistence layer, these services can be maintained and extended with far more ease and allow greater control in terms of access restriction on the ‘Public API’ layer.

The built-in Linx plugins handle all the cryptography and HTTP functionality.

Public API

This is a Linx REST web service that exposes a public API for generating and retrieving access tokens.

This service acts as a “buffer” layer between the external world and hidden authentication services. This service does not persist any data and acts as a facilitator between the user and the different sub-services that persist data.

This service is responsible for the runtime encryption/decryption of access tokens and for sending this data to, and receiving it from, the relevant data storage services. Requests made to these subsystems are authenticated using an internal API Key. This serves two purposes. First, it places a layer of security between the public API and the sub-services. Second, it offers a simple way of applying security if you later split the sub-services onto their own servers.
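As a rough illustration of the internal API Key check, a sub-service could compare the key on an incoming request against its configured value in constant time. This is a minimal Python sketch rather than the Linx implementation; the header name `X-Internal-Api-Key` and the function name are assumptions made for the example.

```python
import hmac

# Hypothetical header name; the article does not say how the key is sent.
INTERNAL_KEY_HEADER = "X-Internal-Api-Key"


def is_internal_request_authorised(headers: dict, expected_key: str) -> bool:
    """Return True only if the request carries the expected internal key."""
    supplied = headers.get(INTERNAL_KEY_HEADER, "")
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(supplied.encode(), expected_key.encode())
```

A sub-service would run this check before doing any work and reject the request if it returns `False`.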

When a token retrieval request is received, a request is made to the ‘user admin API’ to validate the API Key. The user admin service responds with the encryption key associated with the service provider and API Key. This key data is passed through to the operation of the request and used to encrypt and decrypt the access tokens.

User administration service:

This is a Linx REST web service to manage and validate user-related credentials and API keys. This service is responsible for generating API Keys and encryption keys used for encrypting/decrypting the access tokens. This service is called from the ‘Public API’ before any request operation proceeds; it returns the key needed to encrypt/decrypt the access token.

Token storage service:

This service is responsible for the initial storage of the state and encryption key and subsequent storage and retrieval of access tokens. The idea here is that this is purely a database layer that stores and sends data without business logic.

OAuth service:

This is a generic standalone service to handle service provider authentication details and requests. This service constructs and returns an authorisation URL and manages the exchange of the authorisation code for each of the access tokens.

Process flow

I came up with the following flow keeping in mind that at no point was a credential value stored in plain text and any decryption/encryption needed to use runtime user-provided key values. All of the below was achieved using a low-code platform (Linx).

API Key registration:

Users can register for a new API Key using their login credentials. Once validated, a new user API Key is generated, along with a corresponding encryption key that is used for encrypting any access tokens associated with the API Key. The API Key is then hashed, and the ‘encryption key’ is encrypted with the plain text value of the API Key. This means that the ‘encryption key’ can only be decrypted with the original API Key, which is never stored anywhere on the server. Finally, the plain text value of the API Key is returned to the user to save for future use.

App authorisation:

To initiate the OAuth 2.0 Authorization Code Grant Flow, a user needs to make a request in a browser to an authorisation endpoint that is specific to both the service provider and the connecting application.

To initiate the flow using the Linx service, a user makes a request to the public-facing API, submitting their API Key generated in the previous step. This key is validated behind the ‘user admin API’, and the corresponding encryption key is returned. A random ‘state’ is generated, and the authorisation URL is constructed. The ‘state’ and ‘encryption key’ are then stored in the database behind the scenes by the ‘token storage API’: the ‘encryption key’ is encrypted with the original ‘state’ value and stored alongside a hashed version of the ‘state’. The constructed authorisation URL is then returned to the user.

The user then needs to be redirected to the URL, or navigate to it in a browser, and authorise the application.
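Generating the ‘state’ and the authorisation URL could look something like the following Python sketch. The query parameters are the standard OAuth 2.0 ones; the function name, the example endpoint, and the choice of SHA-256 for hashing the ‘state’ are assumptions for illustration. Encrypting the ‘encryption key’ with the ‘state’ is left out to keep the example short.

```python
import hashlib
import secrets
from urllib.parse import urlencode


def build_authorisation_url(
    authorise_endpoint: str, client_id: str, redirect_uri: str, scope: str
) -> tuple[str, str, str]:
    """Return (authorisation URL, plain 'state', hashed 'state' to store)."""
    state = secrets.token_urlsafe(32)  # unguessable value, checked on callback
    query = urlencode(
        {
            "response_type": "code",  # Authorization Code Grant
            "client_id": client_id,
            "redirect_uri": redirect_uri,
            "scope": scope,
            "state": state,
        }
    )
    # Only the hash is persisted; the plain value travels in the URL.
    state_hash = hashlib.sha256(state.encode()).hexdigest()
    return f"{authorise_endpoint}?{query}", state, state_hash


# Hypothetical provider and app URLs, for illustration only.
url, state, state_hash = build_authorisation_url(
    "https://provider.example/oauth/authorize",
    "my-client-id",
    "https://app.example/callback",
    "read",
)
```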

Generating and storing access tokens:

Once the user has authorised the app, the service provider sends a request containing a ‘code’ parameter to a ‘redirect’ or ‘callback’ URL. The ‘code’ and other client identifiers are then exchanged with the authorisation server, and an access token is returned.

To achieve this in Linx, the Public API exposes a method to receive this callback request. The ‘state’ is validated via the ‘token storage API’ sub-service, which returns the encryption key. The ‘code’ is then sent to the ‘OAuth API’ sub-service to generate and return the access token. The returned access token is then encrypted using the value of the ‘encryption key’. Finally, the encrypted access token string is sent to the ‘token storage API’ and is stored in the database linked to the API key and service provider.
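The callback handling can be sketched in Python as three small steps: reading the ‘code’ and ‘state’ from the callback URL, checking the ‘state’ against the stored hash, and building the form-encoded body for the token request. The function names and example URLs are assumptions; the body fields are the standard ones for the authorisation code exchange, although some providers expect the client credentials in an HTTP Basic header instead of the body.

```python
import hashlib
import hmac
from urllib.parse import parse_qs, urlencode, urlparse


def parse_callback(callback_url: str) -> tuple[str, str]:
    """Extract the 'code' and 'state' query parameters from the callback."""
    params = parse_qs(urlparse(callback_url).query)
    return params["code"][0], params["state"][0]


def state_is_known(state: str, stored_state_hash: str) -> bool:
    """Hash the returned 'state' and compare it with the stored hash."""
    candidate = hashlib.sha256(state.encode()).hexdigest()
    return hmac.compare_digest(candidate, stored_state_hash)


def build_token_request_body(
    code: str, client_id: str, client_secret: str, redirect_uri: str
) -> bytes:
    """Body to POST as application/x-www-form-urlencoded to the token endpoint."""
    return urlencode(
        {
            "grant_type": "authorization_code",
            "code": code,
            "redirect_uri": redirect_uri,
            "client_id": client_id,
            "client_secret": client_secret,
        }
    ).encode()
```

If `state_is_known` returns `False`, the callback should be rejected before any code exchange takes place.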

Retrieving access tokens:

To retrieve an access token to use in an external request, the user encryption key needs to be retrieved from the ‘user admin API’ by validating the submitted API key. The matching encrypted access token then needs to be retrieved from the ‘token storage API’. These two values are used in the decryption at runtime, and the decrypted access token value is returned to the user.
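The encrypt-on-callback and decrypt-on-retrieval steps might be sketched as below. To stay within the Python standard library, this uses an HMAC-SHA256 keystream with an authentication tag as a stand-in for the cipher that the Linx cryptography plugin provides; in a real service you would use a vetted authenticated cipher such as AES-GCM from a maintained library. The function names and the blob layout (nonce, ciphertext, tag) are assumptions for the example.

```python
import hashlib
import hmac
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from the key and nonce."""
    blocks = [
        hmac.new(key, b"stream" + nonce + i.to_bytes(8, "big"), hashlib.sha256).digest()
        for i in range((length + 31) // 32)
    ]
    return b"".join(blocks)[:length]


def _tag(key: bytes, nonce: bytes, cipher: bytes) -> bytes:
    return hmac.new(key, b"tag" + nonce + cipher, hashlib.sha256).digest()


def encrypt_token(encryption_key: bytes, token: str) -> bytes:
    """Return nonce + ciphertext + tag, ready for the token storage service."""
    nonce = secrets.token_bytes(16)
    data = token.encode()
    stream = _keystream(encryption_key, nonce, len(data))
    cipher = bytes(a ^ b for a, b in zip(data, stream))
    return nonce + cipher + _tag(encryption_key, nonce, cipher)


def decrypt_token(encryption_key: bytes, blob: bytes) -> str:
    """Verify the tag, then recover the plain-text access token."""
    nonce, cipher, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, _tag(encryption_key, nonce, cipher)):
        raise ValueError("wrong key or tampered token")
    stream = _keystream(encryption_key, nonce, len(cipher))
    return bytes(a ^ b for a, b in zip(cipher, stream)).decode()
```

Because the encryption key is only recoverable from the user’s API Key at runtime, the stored blob alone cannot be turned back into a usable token.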

Security and privacy considerations

As I was planning on storing access tokens for multiple users whom I don’t know and who don’t know each other, I needed to ensure that any credentials used to act as a user were stored in such a way that, even with access to the Linx server and the database, I wouldn’t be able to use the access tokens to gain access to their protected resources.

To mitigate this risk, confidential credentials are always either stored as a hashed value or encrypted and decrypted using runtime values that only a user or authorisation server will know.

If a malicious individual were to gain access to the server, there would be no way of acting on behalf of the user. They could only use the encrypted tokens if they already had that specific user’s password, and even then they would be unable to access any other user’s confidential data.

Furthermore, by having a front-facing API that manages the request to the ‘hidden’ surfaces, you increase your control over user actions. You can further improve this by deploying the services on isolated servers, adding another layer of security.

Data persistence

User-related authentication data and access tokens are stored as encrypted values in a cloud database.

My particular implementation involves having all of the services in a single Solution hosted on a single server. Unfortunately, this means that the services all share a common database which adds a risk of complete breach.

To solve this, confidential credentials are always either stored as a hashed value that cannot be reverse-engineered or encrypted and decrypted using runtime values that only a user or authorisation server will know.

If a malicious individual were to gain access to the server, there would be no way of acting on behalf of the user using the encrypted tokens unless they already had that particular user’s password.

As mentioned above, the attack surface can be minimised by hosting separate database instances on isolated servers, adding an extra layer of security between each Linx service and the database.

I used a MySQL cloud database instance in this service. I selected it as I thought it would be the easiest for people to install. In addition, the solution is easily interchangeable with other database drivers.

Deployment considerations

While building this service, I realised pretty early on that it would need to run in two places: locally in the Linx Designer, for ease of development, and on a live server, for cases where HTTPS is required or where the service needs to be deployed to a live environment with minimal effort. By splitting up the services, you can have the tested services running on your server while you run the other services locally as you work on them.

Implementation issues and common pitfalls

One particular issue that I struggled with while implementing my design was that values are encrypted and decrypted with runtime values. Unfortunately, this meant that any functionality related to using the tokens (such as refreshing tokens) is limited to a user’s request.

As much as I’ve tried to make this generic, each system has nuances which sometimes need to have explicit workarounds, and this means that adding new systems requires a slightly different approach and research. However, these differences are manageable with the Linx architecture.

Limitations

Currently, the service is capable of redirecting the user automatically. However, the API Key required to authorise the request needs to be added to the request itself, which the user cannot achieve by making a simple GET request in their browser. Making this possible would require some additional development on a front-end.
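To illustrate why a plain browser GET falls short, here is a Python sketch of a client request that carries the API Key. The article does not say how the key is attached, so sending it as a bearer value in the `Authorization` header, the `/tokens/{provider}` path, and the base URL are all assumptions for the example.

```python
import urllib.request


def build_token_retrieval_request(
    base_url: str, provider: str, api_key: str
) -> urllib.request.Request:
    """Build (but do not send) a GET request that carries the API Key."""
    return urllib.request.Request(
        url=f"{base_url}/tokens/{provider}",  # hypothetical endpoint path
        headers={"Authorization": f"Bearer {api_key}"},  # assumed header scheme
        method="GET",
    )


# Hypothetical service URL and key, for illustration only.
request = build_token_retrieval_request("https://tokens.example", "github", "my-api-key")
```

A browser address bar cannot attach a header like this, which is where a small front-end would come in.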

Refreshing access tokens is an issue due to the runtime design of the encryption. If users require tokens to be refreshed then they must handle that process externally.

Roadmap

The plan for this project is to hopefully implement a front-end with JavaScript or React, which will allow you to more efficiently manage tokens from a front-end portal without having to set up web service calls to handle user registration and token generation.

Afterthoughts

I’m pretty happy with the result, and I can now spin up different integrations or get my team members to quickly connect to the several APIs we interact with daily without having to go through all the access control.

Is it overkill? Sure. There are several 3rd party identity management service providers like Auth0 out there in the market. Still, in some cases, you may not want to add another external system to your security stack and want to roll your own in-house. For example, if you are developing integrations with Linx, it makes sense to have it all under one roof to control and customise your needs. Furthermore, as Linx does most of the heavy lifting, you don’t necessarily need too much knowledge of the underlying cryptography.

Half of the challenge for me in developing this project was accomplishing the above purely in Linx.