A typical IT task is moving data around and making it available for consumption. Many different approaches can be used to facilitate the movement of data. This post will take a deeper look into different data transfer options and how you can automate them.

Automated file creation | Automated file consumption | Emailing data or files containing data | File and directory maintenance and FTP operations (FTP Uploads and downloads) | Data distribution via API | Message queues such as RabitMQ | Database to Database migrations

Linx works with most file types, including;

- Flat text files (txt)

- CSV

- Excel files

- XML files

- JSON files

- PDFs

Automated file creation

A standard method to distribute and move data is via files. Linx can create a process that will pull data from a data source such as a database, API, or even other files and write that data to a new file or append it to an existing file.

Example A:

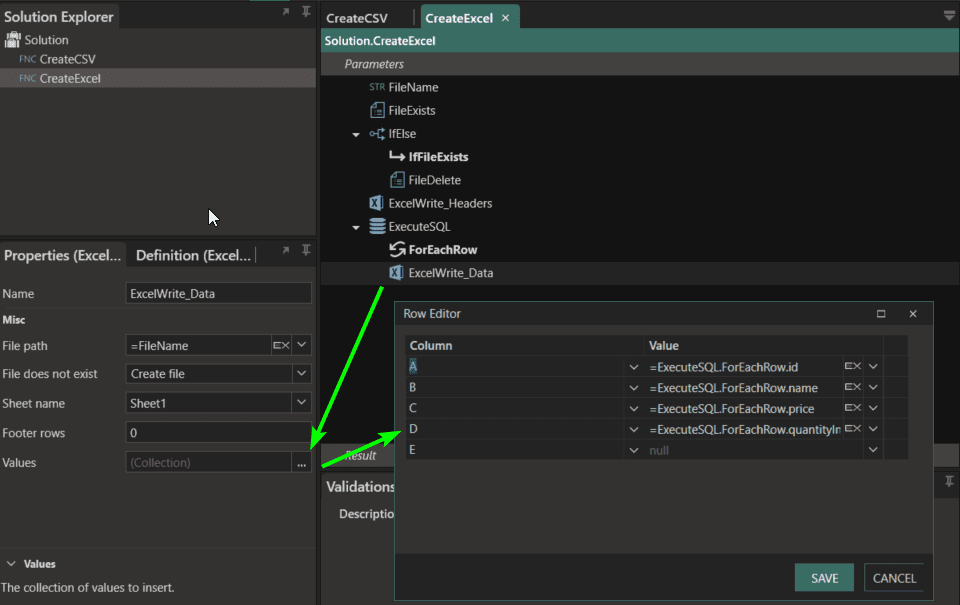

The below screenshot illustrates a function in Linx to:

- Check if a file exists with the given name (can be fully dynamic)

- If it does exist, delete the existing file

- Create a new Excel file with headers

- Retrieve data from a database

- Write each line retrieved into the excel file

The user can add additional logic and transformations to customize or format the data in a specific way. You can also add logic to ensure that only the selected or required data is written to the file.

Example B:

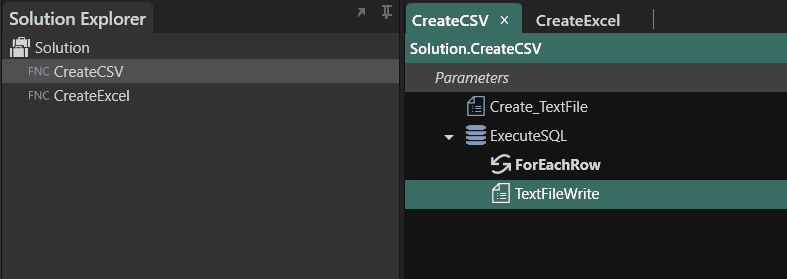

A function to create a CSV file can is even more straightforward. The below function will:

- Create a new text file with headers

- Retrieve data from a database

- Append each line to the file with CSV formatting

You can create most files using this method. XML and JSON files can be created using the XML or JSON plugins, which will help with formatting.

Additionally, you can automate file creation by using triggered events. For example, you may need to create a CSV file after a batch load or at a specific time of day. Linx allows processes to be executed at specific times using a timer service. Users can set timers to execute at an interval or specific time.

Read more: File operations – Tutorial

Automated file consumption

When files containing data are received, they are consumed and imported into a database or system. Linx can read from the most common file types, allowing you to create a function that will read your files using plugins. You can add logic to cater for specific cases depending on your requirements. Data can also be formatted or transformed using the built-in expression editor.

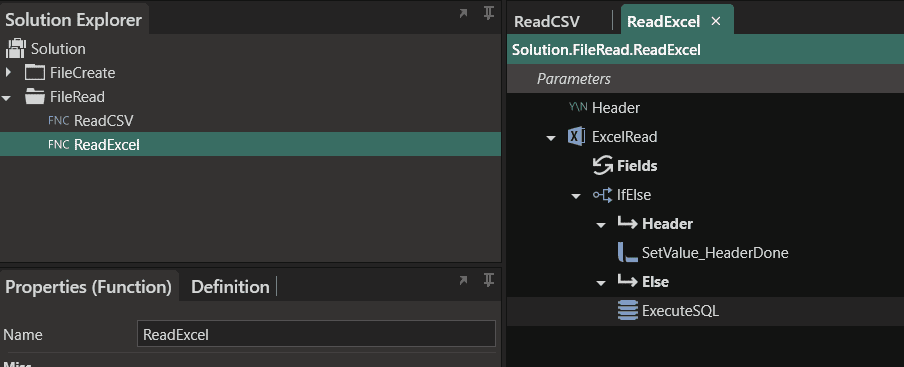

Example A:

The process below is a simple file reader that will load data into a SQL Table using a bulk copy function. Bulk copy is suitable for writing a large amount of data into a single table.

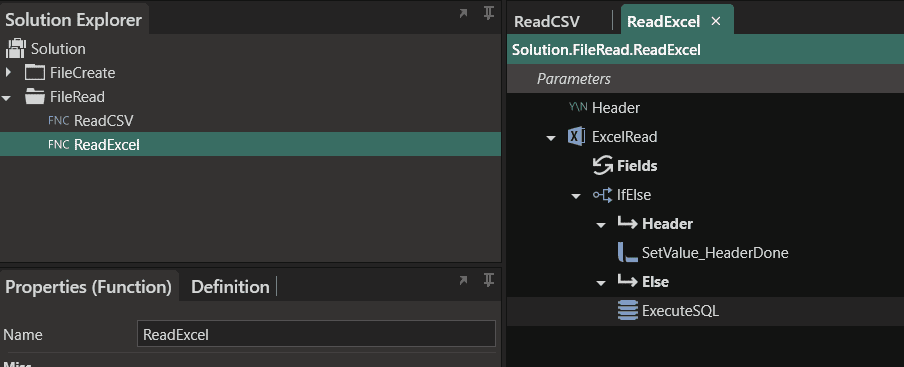

You can also write line-for-line into a database by using the ExecuteSQL function. To do so, create a function that does the following:

- Read each line from an Excel file

- Check for the header and skip it

- Read each line into a database table using an insert statement that was generated with Linx

Going one step further, the reading of files can be automated to execute as the file gets added to a folder. Linx will monitor a file and execute events using the DirectoryWatch function in this scenario. This function will watch a specific directory and can be set to monitor for file creation or changes. You can add custom logic to check for specific files or file types to execute your desired functionality, such as another function that will read the specific file.

Read more: Building a file reader solution

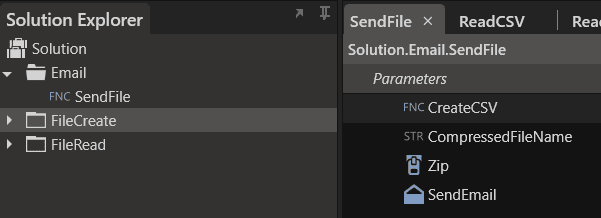

Emailing data or files

Data may be transferred via email, either by adding the data directly to the email body or via an attachment. The email can be triggered on an event such as a timer, directory watch, cron or an API call.

To do so, you can create a function to;

- Create a CSV file

- Compress the data file and add a password to the archive for security purposes

- Send the compressed archive as an attachment via SMTP

File and directory maintenance and FTP operations

Linx allows for complex file and folder management. You can:

- Move files

- Copy files

- List files

- Move directories

- Copy Directories

- Use Robocopy functionality

Using Linx’s archiving capabilities is especially useful when you are producing or consuming many files and need to do automated folder management. You can create a process to archive files and add logging entries into a data store.

Additionally, you can add custom logic to perform (complex or simple) folder and file management. This functionality is not limited to local resources. You can also use the FTP plugin to perform remote file and directory operations, including;

- FTP uploads and downloads

- FTP directory maintenance and management

- FTP file maintenance and management

Data distribution via API

Data can be distributed using an API. To do so, you will create the REST endpoints using the REST wizard, defining your endpoints as operations. You have complete control over your API, allowing you to read from a data source, manipulate the data and pass it back as a response body. A detailed guide on creating a REST API can be found here.

CRUD (Create, Read, Update, Delete) functionality is facilitated via API. You can create an API that will make data available to your organization. You will set the security according to your requirements and Linx can generate documentation in the form of Swagger or Redocly documentation.

This video below illustrates how to create a simple API with an endpoint to retrieve the data. The data retrieval endpoint will read data from a database and will return the data in JSON format.

Message queues

Message queues (MQ) are a powerful way to facilitate data transmission between applications. Message queues facilitate the asynchronous transfer of data between two different applications.

There are many types of message queues, but they all work similarly. They allow you to send messages from one application to another without waiting for a response. One application sends the messages, and the other reads them and processes them when it has the time.

The RabbitMQ plugin lets you easily read from RabbitMQ, write RabbitMQ and listen to a queue on a RabbitMQ server to trigger an event when a new message arrives. If you opt for a Kafka server, the Linx Kafka service can connect to Kafka-compliant servers (brokers) to receive specific topic-based messages for use in your Linx solution.

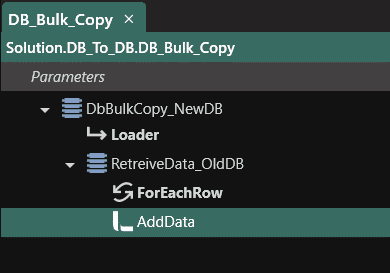

Database to Database migrations

Extracting data from one database to be loaded on another can be accomplished by using the database plugin.

The below process illustrates how that can be done:

- A bulk copy function is used to write all the data quickly and efficiently

- The data is pulled from the old database via an ExecuteSQL function

- Data is added to the Loader. You can change specific values or apply transformations or business rules before adding the data to the loader.

Alternatively, you can read data from one database and add it to another using the ExecuteSQL component. You can apply transformations and checks on values on a record-by-record basis. Specific values can also be selected to be added to specific tables, which is especially useful when the schemas of the databases are different.

Data can be read from and written to any :

- MSSQL

- MySQL

- Oracle

- PostgreSQL

- MongoDB or other

When combined with the scheduling functionality (timers and crons) you can create an automated data migration system or application that will apply custom logic and transformations on your data based on your requirements.

Additionally, you can trigger the data movement function via a REST API call. This will allow for the data to be transferred when another system or remote control process dictates e.g. creating a data migration once reconciliations are completed in the source system.