Retrieving data from a source, ensuring it suits business requirements, and moving that data into a target data source is critical to any data strategy. Low-code tools can help create robust and flexible ETL processes that automate your data loading.

Low-Code ETL

Building an ETL process usually has three major steps. These three steps can be implemented in subprocesses or one process. The benefit of dividing them into sub-tasks or sub-processes is that they are more manageable, easier to debug, and can be executed separately, thus making them rerunnable.

Extract

Data can be extracted from different data sources:

1. Databases

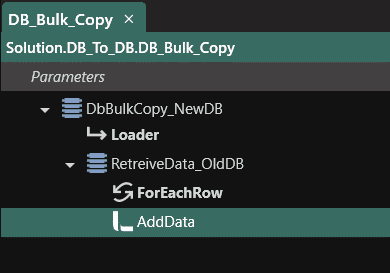

Low-code tools often allow for easy data consumption from databases. In Linx, extracting data from one database to be loaded on another can be accomplished by using the database plugin.

The below process illustrates how that can be done:

- A bulk copy function is used to write all the data quickly and efficiently to a target database table, such as a staging table

- The data is pulled from the source database via an ExecuteSQL function

- Data is added to the Loader. You can change specific values or apply transformations or business rules before adding the data to the loader.

Data can be read from and written to the most popular database formats, including: MSSQL, MySQL, Oracle, PostgreSQL, MongoDB or other.

Further reading;

2. Files

Using a low-code tool such as Linx can import files quickly with the built-in file read function. This function allows you to specify the file location, which can be dynamically configured. If you are reading a CSV or flat file. You can specify the columns, import them from the file’s header, and configure each field’s output type. Doing so gives you full control over how each field is imported.

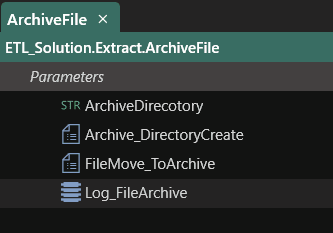

PDFs and Excel files can be read similarly. If you are a Google user, Linx has a plugin to interact with Google Sheets. After importing a file, you can archive it with the file management functionality. This includes moving the file to an archive folder, directory management and more. A simple archive process will look like this:

Further reading; File operations (tutorial)

3. APIs

With the popularity of APIs, you may need to retrieve data from one. You can quickly call a REST or SOAP API with Linx via the CallRESTEndpoint function.

Once you make the API Call, you can bind the response body to a type, making it far more manageable to interact with specific values or lists in the response body. Responses can be XML, JSON or string. You have full control over the data’s use after receiving it – whether you need to access specific values or the entire data set.

4. More options

Other data retrieval options available in Linx include:

Once the data is extracted from the data source, you can manipulate and pass it around in various ways.

Staging the data

You may need to load data into a staging data store. This can quickly be done using either a bulk data loader (DbBulkCopy) or loading the data line for line.

Transform

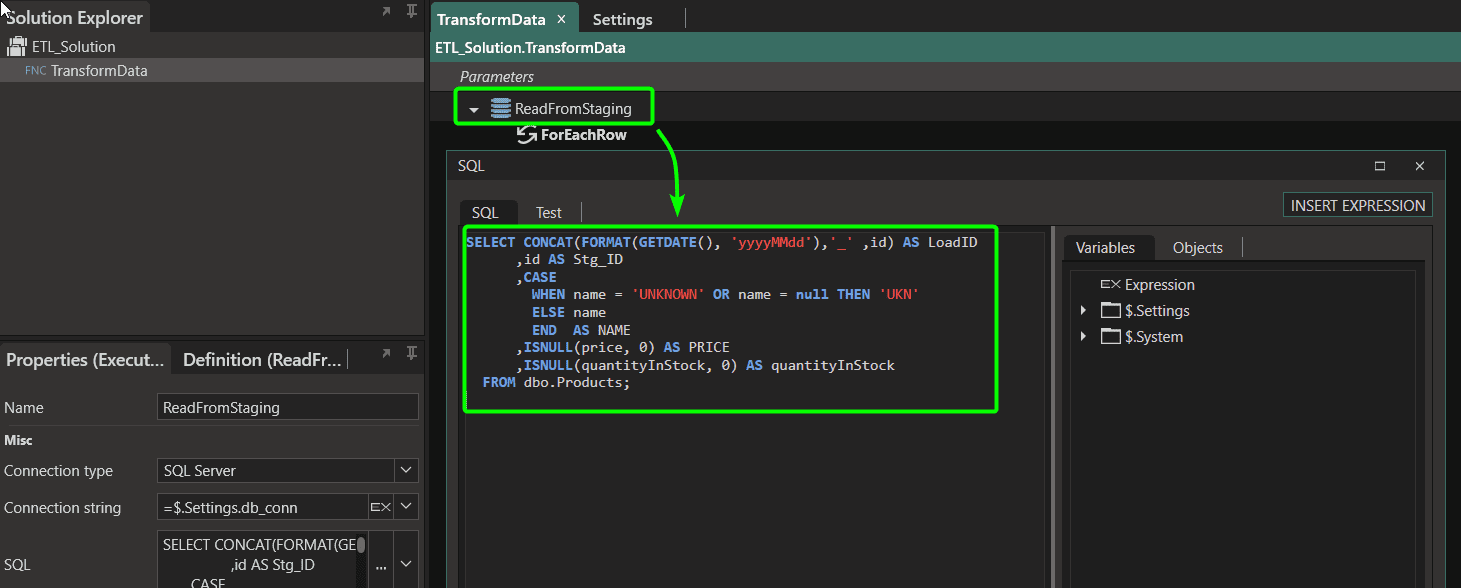

Transforming data can be done in various ways. Using Linx you can add transformations by using custom SQL, calling a stored procedure or applying transformation rules via the logic you implement.

Custom SQL

Using the ExecuteSQL component, you can create simple or complex SQL scripts to be executed. You can use T-SQL (or equivalent) queries and stored procedures directly in the ExecuteSQL function. This means that transformational logic can be applied when data is retrieved from your Staging Database.

You can also read from a view, or stored procedure, meaning you can apply transformations in your database. Calling stored procedures allows for parameter passing. Executing a stored procedure is simplified by using the ExecuteStoredProcedure function.

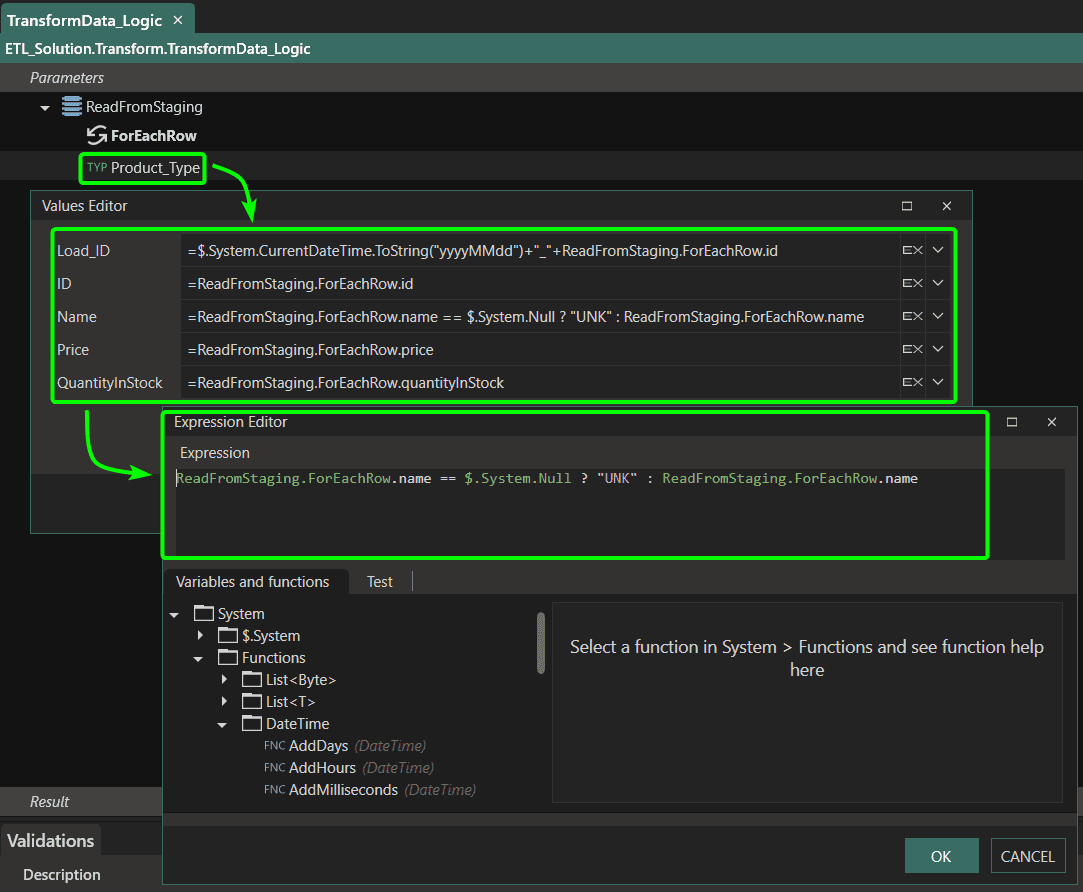

Custom Logic

You can apply custom logic and transformations in Linx directly, meaning that you do not need to use or have a deep knowledge of SQL. These transformations can be applied directly to values retrieved from the data source. You can choose to navigate the list of records or data objects. These objects can be bound to a type created by you or imported from XML or JSON objects.

Further reading; How to work with conditional statements within expressions

Load

Data needs to be loaded to the target data store. That data store can be a database (SQL or Mongo) or data store in the cloud that is accessible via API. With Linx you can quickly write to a target database with multiple options:

- Database Write via SQL. With the ExecuteSQL function, you can create and execute your SQL queries. You can create simple or complex SQL scripts.

- Execute Stored Procedures. Pass parameters to insert values via a stored procedure that you create in your SQL Database. You can use the same stored procedure to facilitate data transformations upon insertion.

- Bulk copy, This allows you to efficiently copy a large number of rows to a table in a SQL Server or Oracle database.

- Mongo DB Write. Linx has a plugin function that performs write operations on a Mongo database.

- API Call . Some datastores to require data to e imported via SOAP or REST API call. You can quickly call a REST or SOAP API with Linx via the CallRESTEndpoint function.

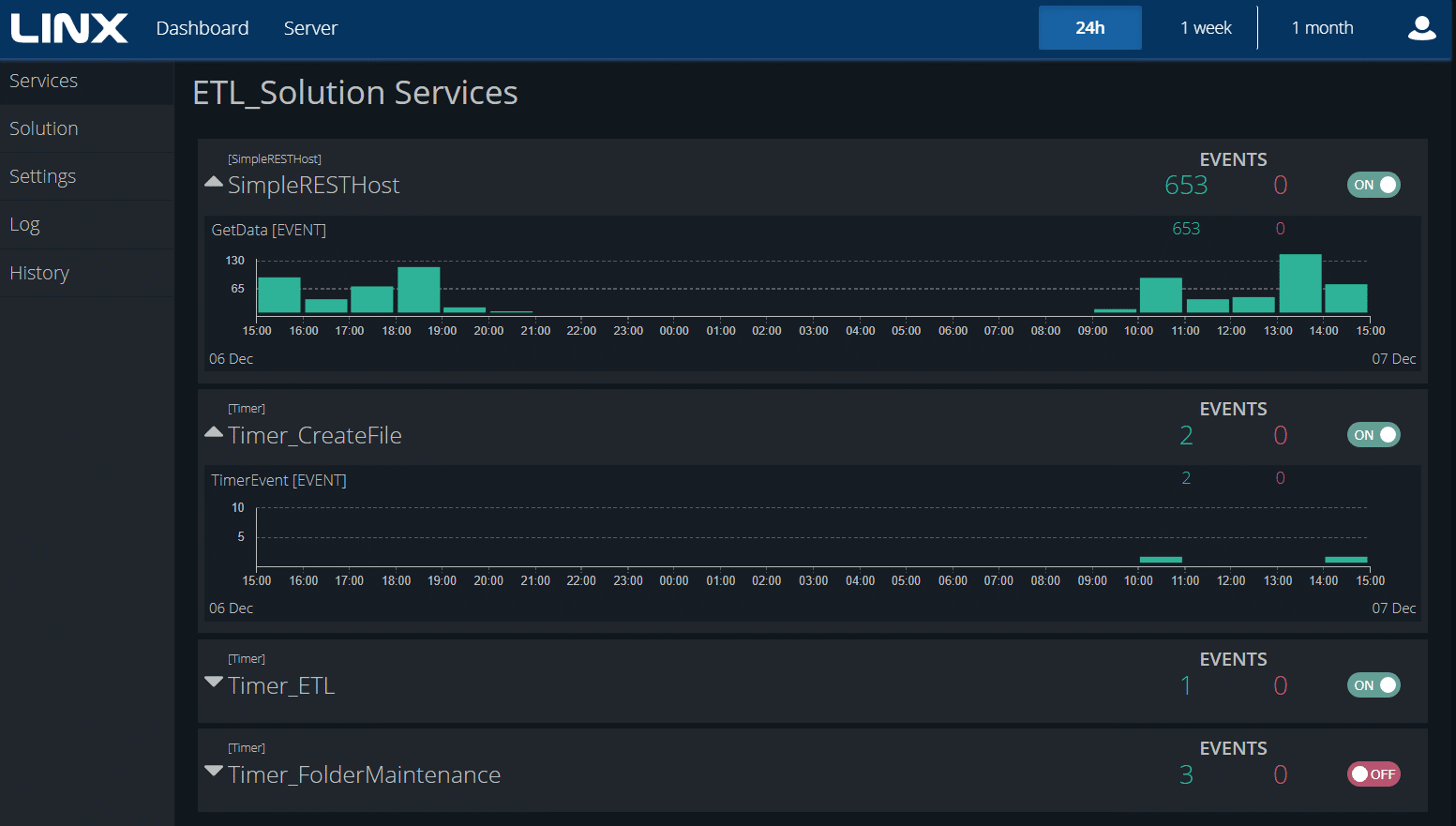

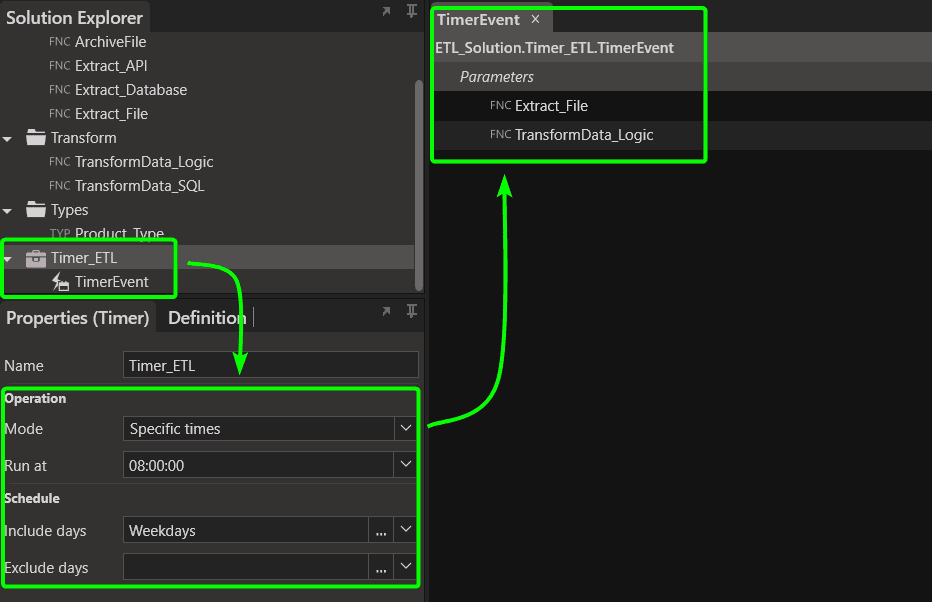

Orchestrating the ETL

When the ETL process is built, you can execute it based on certain conditions or on a schedule. For example, a file may need to be loaded once it is dropped in a directory, or data to be loaded every morning at 8 AM.

Linx allows you to monitor specific directories for activity and kick off file loads when a new file is added to a folder or directory. Other directory activities such as file moving, copying, and maintenance are also available, allowing for robust archiving and folder maintenance operations.

Using a timer service, you can schedule functions and processes to be executed at specific times or intervals. You configure the timer according to your requirements and add in created events or custom logic if a specific operation needs to be executed based on pre-defined rules.

For example, everyday data must be loaded, and as part of the data load, a file must be imported, however, the file may only be imported if it is available in the directory.

Low-code ELT

Since the significant difference between ETL and ELT is the order in which steps are executed, the methods mentioned above also apply to ELT. The main difference will be that Transformations will be applied to the loaded data. You can do this in multiple ways:

- By using SQL to apply transformational rules on data, typically in views or stored procedures

- Applying transformations on values before they are written to a file or sent in a response body of an API

Data delivery and distribution can also be automated with Linx. You can:

- Create files such as CSV, text, Excel, XML or JSON

- Create REST APIs

Data Distribution

Once data has been loaded into your datastore, you may need to make it available for consumers or other applications. There are multiple ways you can use Linx to facilitate this:

1. Generate files

The prebuilt functionality in Linx allows you to easily create files with the data obtained from any source (database, API calls etc.) Data, specific fields, or logic-specific values can be transformed, altered, or formatted before writing those records to the file. You can create flat files such as text or CSV, Excel documents, PDFs or XML or JSON Files.

2. REST API Creation

Linx allows for robust REST API creation. The platform offers two ways to do so;

Design First API

Create your API using an OpenAPI 3.0 specification that you define. When you import the specification into a RESTHost function, all your endpoints will be created as events. You can use any of the functions in Linx to define logic and add functionality for your endpoints.

Build and Host API

If you do not need to build from a specification, create the REST endpoints using the API Wizard. Here you will define your endpoints as operations and have full control over how you want to populate functionality You have full control over security. Linx can also generate API documentation for you in either Swagger or Redocly format. This documentation is hosted with the API and is available for your consumers.

Once development is complete, the API is hosted on a Linx Server, where monitoring and logging are provided as standard.

Step-by-step API guides: Code-first or Design-first

3. FTP file movement

Linx allows for complex file and folder management. This can be combined with custom logic to perform the highly specific file transfer. You can move, copy or delete files and folders. This functionality is not limited to local resources, you can also use the FTP plugin to perform remote file and directory operations. This includes:

- FTP uploads and downloads

- FTP directory maintenance and management

- FTP file maintenance and management

Application Hosting

Completed applications are deployed and hosted on a Linx Server. The Server is a Windows Service that hosts and manages your Linx solutions and web services. You can install Linx Server on the hardware of your choice (on-premise or cloud) with monitoring, metrics and logging as standard. Automated processes such as timers and event monitors will automatically be executed and run on the server once switched on.