Any organisation wanting to pursue digital transformation understands the value of good-quality data. Data is akin to digital gold, and it is immensely important to strategic decision-making. Ensuring that your data or BI team has everything they need is part of the challenge.

Data integration

Organizations often have data stored in multiple systems and formats. Integrating data from different sources, such as databases, spreadsheets, and legacy systems, can be complex and time-consuming.

Data silos

Data silos occur when data is stored in isolated systems or departments within an organization, hindering access, sharing, and integration. Silos restrict collaboration, data analysis, and decision-making. They result from organizational or technological barriers like incompatible formats, separate databases, or limited sharing protocols. Breaking down silos is essential for a unified view of data, cross-functional collaboration, and maximizing information assets.

Data integration solved

There is no simple answer to this problem. You must do the legwork to ensure all the data is accessible. This is typically done by implementing a centralized data repository or by building middleware applications that facilitate data flow. Projects like these can be costly and take a substantial amount of time.

Moving data into a centralised database

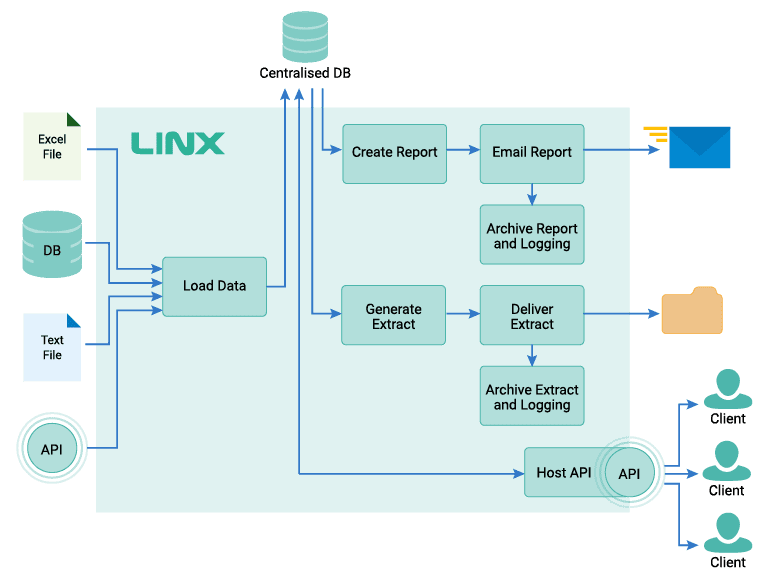

Using Linx, data can be imported from files, APIs, existing systems or anything in between. Once data is centralised, getting the data for your next big report or extract becomes much easier.
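To make the centralisation step concrete, here is a minimal Python sketch of the same idea outside Linx. It assumes two hypothetical sources, a CSV export and a JSON payload such as an API response, whose field names differ, and loads both into a single SQLite table with a shared schema. The data, table and column names are illustrative only; a real load would read from the actual systems into a production database.

```python
import csv
import io
import json
import sqlite3

# Hypothetical source data: a CSV export from one system and a JSON payload
# from another (e.g. an API response). Field names differ between sources.
CSV_EXPORT = """customer_id,full_name,country
C001,Ada Lovelace,UK
C002,Grace Hopper,US
"""

JSON_PAYLOAD = '[{"id": "C003", "name": "Alan Turing", "countryCode": "UK"}]'


def load_into_central_store(conn: sqlite3.Connection) -> None:
    """Load both sources into one table with a shared schema."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "customer_id TEXT PRIMARY KEY, name TEXT, country TEXT, source TEXT)"
    )
    # CSV source: columns are already close to the target schema.
    for row in csv.DictReader(io.StringIO(CSV_EXPORT)):
        conn.execute(
            "INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)",
            (row["customer_id"], row["full_name"], row["country"], "crm_csv"),
        )
    # JSON source: map its field names onto the same columns.
    for item in json.loads(JSON_PAYLOAD):
        conn.execute(
            "INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)",
            (item["id"], item["name"], item["countryCode"], "billing_api"),
        )
    conn.commit()


conn = sqlite3.connect(":memory:")
load_into_central_store(conn)
# One query now spans data that used to live in two systems.
print(conn.execute(
    "SELECT country, COUNT(*) FROM customers GROUP BY country ORDER BY country"
).fetchall())  # [('UK', 2), ('US', 1)]
```

Recording a `source` column is a small design choice that makes it easy to trace any row back to the system it came from.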

Making data accessible via middleware

Another option is to build a middleware application that facilitates the flow and transfer of data. Middleware applications will provide the data in the required format, at the required time.

Middleware can make the data available via:

- Hosting an API

- Scheduled data transfer into a specific store

- Generation and transfer of a file containing a data extract
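The file-extract option can be sketched in a few lines of Python. This is an illustration, not how Linx implements it: the `orders` table, the query and the `write_extract` helper are hypothetical, and a local temporary folder stands in for whatever drop location (network share, SFTP folder) the consumer retrieves files from. Calling the function from a scheduler would turn it into the scheduled-transfer option.

```python
import csv
import sqlite3
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def write_extract(conn: sqlite3.Connection, query: str, out_dir: Path, name: str) -> Path:
    """Run a query and write the result to a timestamped CSV file in out_dir."""
    cursor = conn.execute(query)
    headers = [col[0] for col in cursor.description]
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    path = out_dir / f"{name}_{stamp}.csv"
    with path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(headers)
        writer.writerows(cursor)  # each row is a tuple from the query
    return path


# Hypothetical source table standing in for an operational system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "North", 120.0), (2, "South", 80.5), (3, "North", 42.0)],
)

# A local temp folder stands in for the agreed drop location.
drop_folder = Path(tempfile.mkdtemp())
extract = write_extract(
    conn,
    "SELECT region, SUM(amount) AS total FROM orders GROUP BY region ORDER BY region",
    drop_folder,
    "regional_sales",
)
print(extract.read_text(encoding="utf-8"))
```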

Using a specialised platform to build middleware can speed up the process tremendously, as such platforms typically provide standardised connectors, orchestration options and built-in hosting.

Data quality

Poor data quality can significantly impact the effectiveness of BI initiatives. Only accurate, complete, and consistent data can lead to better decision-making.

Data quality can be compromised during data loading, when transformations are applied, through human error during governance, and anywhere in between.

Data quality tips

Here are a few tips that will assist you with better-quality data:

- Make sure the mappings are correct. Ensure that source fields are mapped to the correct target fields. This can be made easier by using a tool that allows for visual or structural mapping.

- Ensure the right transformations are applied at the right place. This is especially important when working with numbers, as even a tiny calculation error can have serious downstream effects.

- There is power in simplicity. Avoid scattering transformations or mappings across different places in the process; instead, apply them at a few specific, well-defined points.

- Apply data validations and flag validation breaches. Build a validation step into your application that checks that fields conform to a specific pattern. Include a validation breach report that can be compiled and sent to the person or team responsible for resolving data quality issues.

- Use mappings where possible. By using mapping tables and limiting fields to only one of several options, you can have more control over data quality and remove ambiguity. This works incredibly well where data can be pre-defined. For example, products, specific departments, or items that can be categorised.
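Several of these tips can be combined into one transformation step. The Python sketch below is a minimal illustration under stated assumptions: `FIELD_MAP`, `DEPARTMENT_LOOKUP` and `PATTERNS` are hypothetical configuration, and the rules are examples only. It maps source fields to target fields, standardises departments through a lookup table, validates fields against patterns, performs the numeric conversion in exactly one place, and collects breaches into a report instead of silently dropping rows.

```python
import re
from dataclasses import dataclass

# Hypothetical field mapping: source field name -> target field name.
FIELD_MAP = {"cust_no": "customer_id", "dept": "department", "amt": "amount"}

# Lookup (mapping) table restricting department to a fixed set of values.
DEPARTMENT_LOOKUP = {
    "fin": "Finance", "finance": "Finance",
    "hr": "Human Resources", "ops": "Operations",
}

# Pattern each target field must match in full.
PATTERNS = {
    "customer_id": re.compile(r"C\d{3}"),
    "amount": re.compile(r"-?\d+(\.\d{1,2})?"),
}


@dataclass
class Breach:
    row: int
    field: str
    value: str
    reason: str


def transform(rows):
    """Map, standardise and validate rows; return clean rows and a breach report."""
    clean, breaches = [], []
    for index, source in enumerate(rows, start=1):
        # 1. Field mapping, applied in one place.
        record = {FIELD_MAP[k]: v.strip() for k, v in source.items() if k in FIELD_MAP}
        row_breaches = []
        # 2. Pattern validation.
        for field, pattern in PATTERNS.items():
            value = record.get(field, "")
            if not pattern.fullmatch(value):
                row_breaches.append(Breach(index, field, value, "does not match expected pattern"))
        # 3. Lookup mapping: only pre-defined departments are accepted.
        department = DEPARTMENT_LOOKUP.get(record.get("department", "").lower())
        if department is None:
            row_breaches.append(
                Breach(index, "department", record.get("department", ""), "not in lookup table")
            )
        else:
            record["department"] = department
        if row_breaches:
            breaches.extend(row_breaches)
        else:
            record["amount"] = float(record["amount"])  # numeric conversion in one place
            clean.append(record)
    return clean, breaches


source_rows = [
    {"cust_no": "C001", "dept": "FIN", "amt": "100.50"},
    {"cust_no": "X9", "dept": "Marketing", "amt": "12"},
]
clean, breaches = transform(source_rows)
for b in breaches:
    print(f"row {b.row}: {b.field}={b.value!r} {b.reason}")
```

The `breaches` list is what you would compile into the validation breach report for the responsible person or team.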

Data governance

Proper data governance practices are crucial for ensuring data accuracy, security, and compliance. Organizations may struggle to maintain data consistency, protect sensitive information, and adhere to regulatory requirements without a well-defined governance framework.

Data governance defined

Data governance is the management and control of an organization’s data assets. It involves defining policies and procedures to ensure data availability, integrity, confidentiality, and quality. Its objectives are to promote accountability, improve data quality, mitigate risks, and ensure compliance with regulations and standards.

Data governance tips

A good step towards data governance is implementing a mandatory check-and-approval step. We recommend sending the data (or a preview report or extract) to the data owner for review before it becomes available via distribution channels. In that review, you can highlight any validation breaches and whether and how they have been fixed.

You can take further precautions by applying additional validations to the data. Specific checks on specific fields, ensuring they conform to what is expected, are a good start. When using a specialised platform, you can incorporate these checks into your logic and apply them to each individual field.
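A mandatory check-and-approval step is essentially a small state machine: an extract starts as pending review, the data owner approves or rejects it, and only approved extracts can be published. The Python sketch below uses hypothetical names (`Extract`, `Status`, the reviewer address) and models distribution channels as plain callables; it illustrates the gate itself, not any particular product's approval feature.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Iterable, List, Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"
    PUBLISHED = "published"


@dataclass
class Extract:
    """A data extract that must be signed off by its data owner before release."""
    name: str
    rows: List[dict]
    breaches: List[str] = field(default_factory=list)  # issues found and how they were fixed
    status: Status = Status.PENDING_REVIEW
    reviewer: Optional[str] = None

    def review_summary(self) -> str:
        """Text sent to the data owner alongside the preview extract."""
        lines = [f"Extract '{self.name}': {len(self.rows)} rows awaiting review."]
        lines += [f"- {b}" for b in self.breaches] or ["- No validation breaches."]
        return "\n".join(lines)

    def approve(self, reviewer: str) -> None:
        self._decide(reviewer, Status.APPROVED)

    def reject(self, reviewer: str) -> None:
        self._decide(reviewer, Status.REJECTED)

    def _decide(self, reviewer: str, outcome: Status) -> None:
        # A decision can only be made once, while the extract is pending.
        if self.status is not Status.PENDING_REVIEW:
            raise ValueError(f"extract is already {self.status.value}")
        self.status, self.reviewer = outcome, reviewer

    def publish(self, channels: Iterable[Callable[["Extract"], None]]) -> None:
        """Release to distribution channels, but only after approval."""
        if self.status is not Status.APPROVED:
            raise PermissionError("extract must be approved by its data owner first")
        for deliver in channels:
            deliver(self)
        self.status = Status.PUBLISHED


published = []
extract = Extract(
    "monthly_sales",
    rows=[{"region": "North", "total": 162.0}],
    breaches=["row 7: missing region, corrected to 'North' by the data owner"],
)
print(extract.review_summary())
try:
    extract.publish([published.append])  # blocked: not yet approved
except PermissionError as exc:
    print("blocked:", exc)
extract.approve("data.owner@example.com")
extract.publish([published.append])
```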

Data accessibility

Providing access to relevant data to the right people at the right time is essential for effective BI. Ensuring data accessibility across different departments, roles, and locations can be challenging, especially in organizations with a large and diverse user base. Making data accessible can be especially tricky when it comes to skill and time requirements.

Data accessibility options

You can make data available in many ways:

- Data Extract via File: A classic way of providing and distributing data is via extracts or automated files. These files can be generated on a schedule and can be moved to a specific location for the client to retrieve.

- API: An API can be created to give users and other applications access to specific datasets. APIs support strong security, scale well and deliver data exactly when the requester needs it. Because consumers never touch the underlying data source directly, an API reduces security and data integrity risks, and you can serve data per request, in a curated and controlled manner, to those with access to it.

- Reports: The most common data distribution method is to build reports that are sent to interested parties. These can be PDF reports, Excel spreadsheets or even flat files that contain data summaries. Reports are better suited to aggregated data sets that give the user an overview of the data.

- User Interface: Data can be provided via a user interface (front end). You can use tools like Power BI, Stadium, QlikView or even a custom-built website to provide this access.

- Direct data access: Curated views or stored procedures are a classic way to provide data to users. However, giving users direct access to your database or data source carries several risks. An API is usually the better option, as it is more secure, easier to integrate with and carries less risk.
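The curated, per-request access described above can be sketched as follows. This is a minimal, hypothetical example: the API keys, dataset names and the `X-API-Key` header are assumptions, and real deployments would typically sit behind an API gateway or web framework with proper authentication. The core logic is in `handle_request`, which returns an HTTP status and JSON body and never exposes the underlying data store; `DataApiHandler` shows how it could be wired into Python's standard `http.server` module.

```python
import json
from http.server import BaseHTTPRequestHandler
from typing import Tuple
from urllib.parse import urlparse

# Hypothetical API keys mapped to the datasets each consumer may read.
API_KEYS = {"key-finance-123": {"sales_summary"}, "key-ops-456": {"stock_levels"}}

# Curated datasets: only the fields consumers are meant to see.
DATASETS = {
    "sales_summary": [{"region": "North", "total": 162.0}, {"region": "South", "total": 80.5}],
    "stock_levels": [{"sku": "A-1", "on_hand": 40}],
}


def handle_request(api_key: str, dataset: str) -> Tuple[int, str]:
    """Return an (HTTP status, JSON body) pair for a read-only dataset request."""
    allowed = API_KEYS.get(api_key)
    if allowed is None:
        return 401, json.dumps({"error": "unknown API key"})
    if dataset not in allowed:
        return 403, json.dumps({"error": "not authorised for this dataset"})
    if dataset not in DATASETS:
        return 404, json.dumps({"error": f"no dataset named {dataset!r}"})
    return 200, json.dumps({"dataset": dataset, "rows": DATASETS[dataset]})


class DataApiHandler(BaseHTTPRequestHandler):
    """Serves GET /<dataset>, authenticating with the X-API-Key request header."""

    def do_GET(self) -> None:
        dataset = urlparse(self.path).path.strip("/")
        status, body = handle_request(self.headers.get("X-API-Key", ""), dataset)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)
```

To try it locally, `HTTPServer(("127.0.0.1", 8000), DataApiHandler).serve_forever()` (with `HTTPServer` imported from `http.server`) would start a development server; Python's `http.server` is not intended for production use.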

Data accessibility made easy through tools

Often, accessibility is limited by available skills, time or budget. This is where specialised tools and platforms can be of great benefit. If you need to make data available via an API, look for a tool that helps you easily create and host APIs. If you need to create files and email them, look for a tool that can do that. As a start, a specialist low-code platform can help solve these problems at speed without the additional requirement of writing and implementing code.

Scalability

As organizations grow and data volumes increase, scalability becomes a challenge. BI systems must handle large amounts of data, perform complex calculations, and deliver timely insights, which can strain system resources.

The best way to prepare for this challenge is to build a system with scalability in mind. However, BI teams too often have to work with legacy or proof-of-concept (POC) systems and adapt them to handle large quantities of data.

Tips to make your solution scale better

- Optimize Data Models: Review and optimize your data models to enhance performance and scalability. Identify and eliminate bottlenecks, optimize queries, and ensure efficient data indexing. Denormalizing or aggregating data where appropriate can improve query response times.

- Data Partitioning: Partition large datasets into smaller, more manageable subsets. By dividing data based on specific criteria (e.g., time, geography, or customer segments), you can improve query performance and reduce the load on the system.

- Parallel Processing: Leverage parallel processing techniques to distribute query execution across multiple cores or nodes. Distributed databases can spread this work across nodes for faster, more scalable processing.

- Performance Monitoring and Optimization: Monitor system performance, query execution times, and resource utilization. Identify and address bottlenecks proactively by analyzing system logs, monitoring tools, and query profiles. Regularly optimize queries, indexes, and data models based on performance insights. Make performance monitoring easier by building it into your process: log execution start and end times, the number of records processed and similar metrics, so you can later analyse them to identify the slow parts of your process.
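Partitioning, parallel processing and execution logging can be combined, as in this Python sketch. It assumes hypothetical sales rows with a `date` field, partitions them by month, aggregates each partition on a thread pool, and logs start, duration and record count per partition. A thread pool keeps the example simple; for CPU-heavy work in CPython, `ProcessPoolExecutor` (or a distributed database) would give true parallelism, because threads are limited by the global interpreter lock.

```python
import logging
import time
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log = logging.getLogger("bi_pipeline")


def partition_by_month(rows):
    """Split rows into partitions keyed by 'YYYY-MM' taken from the date field."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["date"][:7]].append(row)
    return dict(partitions)


def process_partition(key, rows):
    """Aggregate one partition and log its start, duration and record count."""
    start = time.perf_counter()
    log.info("partition %s started (%d records)", key, len(rows))
    total = sum(row["amount"] for row in rows)
    elapsed = time.perf_counter() - start
    log.info("partition %s finished in %.4fs", key, elapsed)
    return key, len(rows), total


def run(rows, workers=4):
    """Process each monthly partition concurrently and return sorted results."""
    partitions = partition_by_month(rows)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(process_partition, k, v) for k, v in partitions.items()]
        results = [f.result() for f in futures]
    return sorted(results)


sample = [
    {"date": "2024-01-05", "amount": 100.0},
    {"date": "2024-01-20", "amount": 50.0},
    {"date": "2024-02-03", "amount": 75.0},
]
for month, count, total in run(sample):
    print(month, count, total)  # 2024-01 2 150.0, then 2024-02 1 75.0
```

The logged durations per partition are exactly the kind of data you can analyse later to find the slow parts of a process.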

User adoption

BI solutions are only effective if users embrace them and integrate them into their decision-making processes. Low user adoption rates may occur due to factors such as poor user experience, lack of training, or resistance to change within the organization.

Make it part of the user’s process

There is no easy answer to user adoption; however, the easier you make it for users to consume your data or reports, the more likely they are to use them. You can make your reports part of their process by:

- Sending reports on a platform that is already part of their workflow, such as email, Slack or Teams. Integrations like these can be easily built and facilitated via iPaaS or low-code platforms.

- Sending users links via a notification (email or messaging platform) if you use a reporting platform like Power BI.

- Ensuring reports or data are easy to use, well organized and intuitive. Creating your reports as Excel files can help with this, depending on user expectations and their daily workflow.
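Delivering a report into an existing workflow can be as simple as emailing it. This Python sketch uses only the standard library (`email.message.EmailMessage` and `smtplib`); the SMTP host, addresses and report contents are placeholders, and the attachment is a CSV file (which Excel can open) rather than a native Excel workbook, which would need a third-party library. Chat tools such as Slack and Teams typically offer webhook-style integrations that can be used in a similar way.

```python
import csv
import io
import smtplib
from email.message import EmailMessage


def build_report_email(rows, recipients, sender):
    """Build an email with the report rows attached as a CSV file."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)

    msg = EmailMessage()
    msg["Subject"] = "Daily sales report"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg.set_content("Hi team,\n\nToday's sales summary is attached.\n")
    msg.add_attachment(
        buffer.getvalue().encode("utf-8"),
        maintype="text",
        subtype="csv",
        filename="daily_sales.csv",
    )
    return msg


def send(msg, host="smtp.example.com", port=587, user=None, password=None):
    """Send via SMTP with STARTTLS; host and credentials are placeholders."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.starttls()
        if user:
            smtp.login(user, password)
        smtp.send_message(msg)


report = build_report_email(
    [{"region": "North", "total": 162.0}, {"region": "South", "total": 80.5}],
    ["sales-team@example.com"],
    "bi-reports@example.com",
)
print(report["Subject"], [p.get_filename() for p in report.iter_attachments()])
# send(report)  # uncomment once real SMTP settings are configured
```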

Evolving business needs

Business needs and requirements are constantly changing. BI systems must be flexible and adaptable to meet evolving demands, which may involve modifying data models, adding new data sources, or adjusting analytical processes.

There is no magical solution for evolving business needs and requirements. However, it can be managed by using a platform that allows you to make changes rapidly, quickly build new processes and easily analyze existing processes.

Low-code platforms are a good option if you need to develop with agility because:

- Ease of understanding: the visual nature of low-code platforms makes processes easier to understand, which makes enhancement and maintenance much more manageable.

- Rapid application and process development means you can keep up with new requirements and expectations.

- Ease of use means you do not need a team of senior developers to implement more complex functionality.

- Speed of implementation makes it easy to build a proof of concept process that can quickly be tested before time is invested in building the whole process.

- Built-in application deployment and hosting mean your changes can go live as soon as they are ready, with minimal effort.

Low-code platforms suit an Agile development methodology well, as you can quickly create small (or large) processes.

Cost considerations

Implementing and maintaining a BI infrastructure can be costly, especially for smaller organizations with limited resources. Licensing fees, hardware requirements, data storage costs, and ongoing maintenance expenses can strain budgets. Above and beyond that, it can be expensive to hire a development team to write code that will load, transform and deliver your data.

Choosing the right toolset will make all the difference regarding cost. Be sure you choose a toolset that:

- Works for your budget.

- Allows you to build complex, bespoke and feature-rich processes.

- Can be utilised with the skillsets available to you.

- Allows you to meet your timeline goals.

Solving Cost

Reducing costs can be tricky because, as with any change, there are always trade-offs. A low-code platform can:

- Save on skills costs, as these platforms are often easier to use and require less technical knowledge (and fewer senior developers).

- Save on time, as developing processes at speed reduces the time spent developing, testing and hosting.

- Save on infrastructure costs. Deploying and hosting a solution can be expensive, as it requires specific skills and sometimes specific tools; a platform with built-in hosting removes much of this overhead.

- Save on maintenance and enhancement costs in the long run, as processes developed on low-code platforms are easier to analyse, understand and improve.

How Linx solves BI

Linx allows you to build holistic, bespoke and flexible BI solutions at speed, because the entire process, from data loading to delivery, can be built in a single platform. As Linx works as low-code programming rather than a workflow tool, it offers an inherent freedom and flexibility that covers the bespoke nature of BI applications.

With Linx, you can drag, drop and configure components to:

- Build data pipelines and ETLs

- Build monitoring and logging processes

- Generate reports:

  - Excel

  - Text or flat files (CSV, JSON, XML and more)

- Build data and report delivery processes:

  - APIs

  - FTP

- Solve orchestration by setting schedules, timers or event monitors

Because Linx is a platform, your solution is hosted on a Linx server, without the need to figure out infrastructure or deployment processes.